Hey Lizeth,

I am afraid that, without more information, I cannot give a definite answer. Please allow me to elaborate:

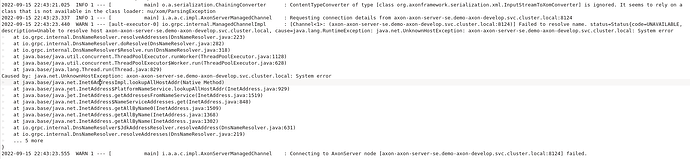

OpenShift, which you might irreverently call Kubernetes-with-extras, deploys containers in Pods. These Pods are a bit limited in their lifetime management, so normally you put a controller on top of them, ranging from a simple Deployment to something as complex as a StatefulSet. Because Axon Server needs PersistentVolumes that are tightly bound to one particular Pod (so you don’t lose your Event Store’s files) and a clear and predictable network identity, we recommend you use a StatefulSet. This is all described in a series of blog posts under the collective name “Running Axon Server” (Part 1, Part 2, Part 3, and Part 4; Parts 2 and 4 are especially relevant here), with an associated set of example scripts and deployment descriptors on GitHub.

In the context of your example, this means that a StatefulSet named “axon-axon-server-se” (if that is what you used) would have a Pod named “axon-axon-server-se-0” for its first (and only) replica. If you used a Deployment or some other controller instead, the Pod name will be the controller’s name followed by a dash and some random letters or digits. So a StatefulSet gives you a predictable Pod name, but it is still more than the simple name you started with.
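To make the naming rule concrete, here is a minimal sketch in Python. The function name is only for illustration, and it assumes the default behaviour where a StatefulSet numbers its Pods starting at ordinal 0:

```python
from typing import List


def statefulset_pod_names(statefulset_name: str, replicas: int) -> List[str]:
    """Return the Pod names a StatefulSet would create: <name>-<ordinal>.

    Assumes ordinals start at 0, which is the Kubernetes default.
    """
    return [f"{statefulset_name}-{ordinal}" for ordinal in range(replicas)]


# A single-replica StatefulSet, as in the example above.
print(statefulset_pod_names("axon-axon-server-se", 1))
# prints ['axon-axon-server-se-0']
```

With three replicas you would get the same name with the suffixes -0, -1, and -2, which is what makes the network identity predictable.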

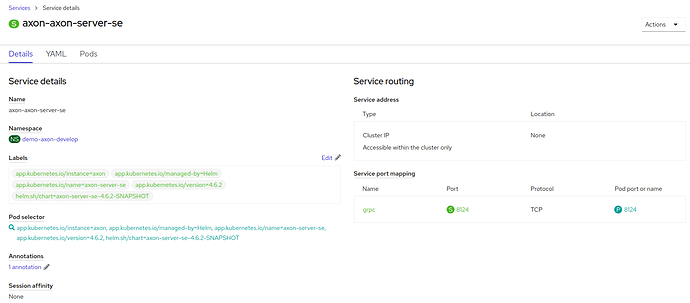

On top of that, you can get a DNS name by creating a so-called headless Service. A Service is how Kubernetes exposes specific ports to other Pods and namespaces, and a headless one gives each Pod of the StatefulSet its own DNS entry. However, these Services depend on the Pod’s health. By default, if the Pod is not reporting ready, no DNS entry is published for it and no traffic is passed on.
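As a rough illustration of what that DNS name looks like, the sketch below builds the per-Pod name that a headless Service provides for a StatefulSet Pod. The Service name and namespace used here are assumptions (I don’t know what yours are called), and “cluster.local” is only the usual default cluster domain:

```python
def pod_dns_name(
    pod_name: str,
    service_name: str,
    namespace: str = "default",             # assumption: your namespace may differ
    cluster_domain: str = "cluster.local",  # assumption: the common default
) -> str:
    """Build the DNS name of a StatefulSet Pod behind a headless Service.

    Pattern: <pod>.<service>.<namespace>.svc.<cluster-domain>
    """
    return f"{pod_name}.{service_name}.{namespace}.svc.{cluster_domain}"


# Hypothetical: a headless Service that happens to share the StatefulSet's name.
print(pod_dns_name("axon-axon-server-se-0", "axon-axon-server-se"))
# prints axon-axon-server-se-0.axon-axon-server-se.default.svc.cluster.local
```

Client applications would use a name of this shape (plus the gRPC port) to reach Axon Server, provided the Pod is ready.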

This is very different from Docker, where you can communicate freely between containers as long as they are on the same Docker network.

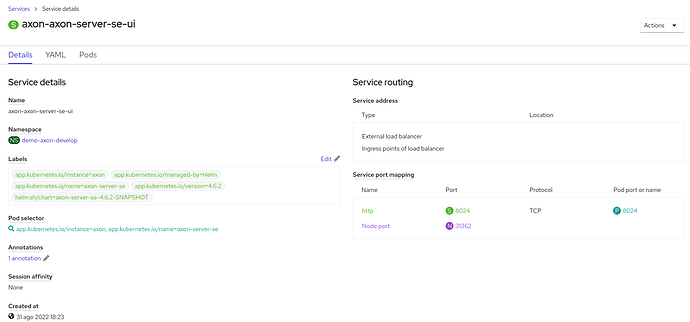

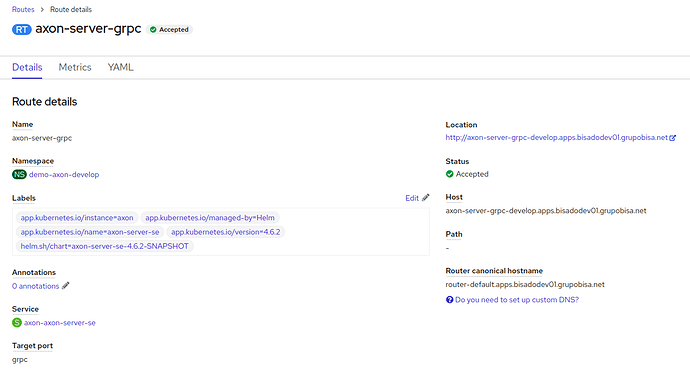

I must admit I have very little experience with OpenShift’s special components, such as Routes, but as far as I remember, they are meant for exposing Services in OpenShift to applications outside the cluster. So, to conclude, can you tell me a bit more about your application’s software architecture? How is it deployed? What Services do you have? Is Istio or some other service mesh in use, and did you set “appProtocol: grpc” on the Service’s port definition?

Cheers,

Bert Laverman