Hello

I ran into a weird situation. We have multiple sagas running in a multi-node Kubernetes setup, all using a PooledStreaming processor configuration.

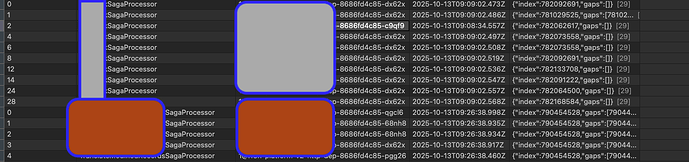

One of the saga processors is not able to update the token_entry table. I've attached screenshots for reference. Please check the timestamp of the processor at the top of the screenshot: it is behind the timestamp of the processor at the bottom. That saga is not able to process any events.

The other issue is that when I try to see which segments are claimed by the owner pod for that specific saga, the EventProcessingConfiguration returns no segments at all. I was doing this so that I could release the segments held by that pod. I've attached a screenshot of this too.


Our saga configuration is:

    // Single coordinator thread per processor
    Function<String, ScheduledExecutorService> coordinatorExecutorBuilder =
            name -> Executors.newScheduledThreadPool(
                    1,
                    Thread.ofVirtual().name("[PSP] Coordinator - " + name, 0).factory()
            );

    // Worker pool of 100 virtual threads per processor
    Function<String, ScheduledExecutorService> workerExecutorBuilderExtended =
            name -> Executors.newScheduledThreadPool(
                    100,
                    Thread.ofVirtual().name("[PSP] Worker extended - " + name, 0).factory()
            );

    EventProcessingConfigurer.PooledStreamingProcessorConfiguration pspConfigExtended =
            (config, builder) -> builder
                    .coordinatorExecutor(coordinatorExecutorBuilder)
                    .workerExecutor(workerExecutorBuilderExtended)
                    .initialSegmentCount(2)
                    .batchSize(100)
                    .tokenClaimInterval(5000)
                    .claimExtensionThreshold(10000)
                    .enableCoordinatorClaimExtension();
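In case it helps to look at the executor setup on its own, here is a minimal stand-alone sketch (plain JDK 21, no Axon involved) that builds the worker executor the same way as above and runs a single task on it. The class name `ExecutorCheck`, the helper `describeWorkerThread`, and the processor name `MySagaProcessor` are placeholders made up for this example, not names from our code base. It only confirms that the pool hands tasks to a named virtual thread; it says nothing about how Axon claims or extends tokens.

```java
import java.util.concurrent.Callable;
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.TimeUnit;
import java.util.function.Function;

class ExecutorCheck {

    // Same shape as workerExecutorBuilderExtended in the configuration above.
    static Function<String, ScheduledExecutorService> workerBuilder(int poolSize) {
        return name -> Executors.newScheduledThreadPool(
                poolSize,
                Thread.ofVirtual().name("[PSP] Worker extended - " + name, 0).factory());
    }

    // Schedules one task and reports the name and kind of the thread that ran it.
    static String describeWorkerThread(String processorName) {
        ScheduledExecutorService executor = workerBuilder(100).apply(processorName);
        Callable<String> task = () ->
                Thread.currentThread().getName() + " virtual=" + Thread.currentThread().isVirtual();
        try {
            return executor.schedule(task, 10, TimeUnit.MILLISECONDS).get(5, TimeUnit.SECONDS);
        } catch (Exception e) {
            throw new IllegalStateException("Task did not complete on the worker pool", e);
        } finally {
            executor.shutdownNow();
        }
    }

    public static void main(String[] args) {
        // "MySagaProcessor" is a hypothetical processor name used only for this check.
        System.out.println(describeWorkerThread("MySagaProcessor"));
    }
}
```

If the pool is wired as intended, the printed thread name should start with the `[PSP] Worker extended - ` prefix followed by the processor name, and the thread should be reported as virtual.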

I tried restarting the pods, deleting the pods, and doing a completely fresh deployment. Each of these only works until the processor has handled 100-200 events; after that it gets stuck again, and I'm not sure why.

Any help would be greatly appreciated. Thank you