As I sit down to write this question I find it hard to not go in a million different directions. I have many questions about how exactly my events should be modeled. That hasn’t stopped me from forging ahead, but I’m often questioning my decisions. The part of our domain that I have built so far is a data input intensive part. It is comprised of two aggregates. One of them is more complex than the other, having a handful of entities and value objects that it manages. I have built my commands and events around the main creation and correction use cases for the aggregates and their related state. So far, the data of both commands and events parallel one another.

For example, I have a command to create a new instance of a Package aggregate, I call this command CreateHeader. It looks something like this:

class CreateHeader { String id; enum type; enum subType; enum[] flags; ... }

When the Package aggregate handles this command successfully it applies the following domain event to the event stream:

class HeaderCreated { String id; enum type; enum subType; enum[] flags; ... }

Then I have a command to correct the Package header details that looks something like this:

class CorrectHeader { String id; enum type; enum subType; enum[] flags; ... }

So then you can probably guess what happens when the Package aggregate successfully processes the header correction command…it applies the following event to the stream:

`

class HeaderCorrected {

String id;

enum type;

enum subType;

enum[] flags;

…

}

`

This basic approach has been followed with the handful of other domain concepts we have implemented so far. It has been fairly effective at addressing the simple use cases we have taken on to date, but I’m not super happy about it. I’m bothered about how closely the events parallel the commands. It just feels a bit naive and seems like it could easily lead to long term maintenance issues. I guess I’m just a bit nervous because this is my first Event Sourced application and I understand that modeling events is the crux, because once an event is persisted it will always need to be supported in the published form (I do understand the role of upcasters, but would love to avoid them as much as possible). I know it’s hard to talk about modeling events outside of the specifics of the domain, but are there any best practices that can be applied here?

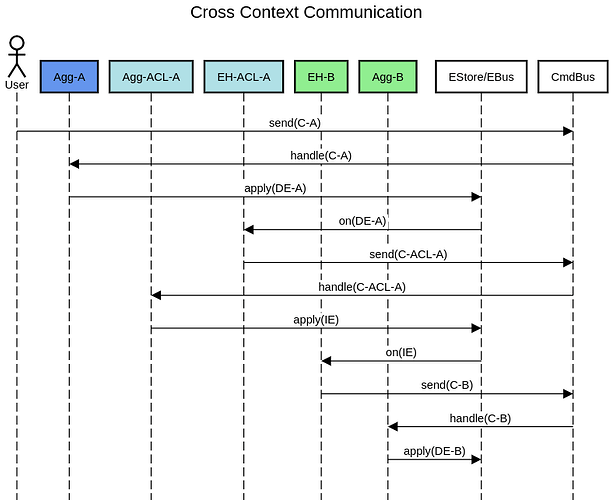

Switching gears a little, I’m also curious about the best way to handle milestone or integration events. I don’t know exactly what to call them, but what I’m talking about is a class of events that are not part of the domain state (they don’t belong in the aggregate’s event stream), they exist to communicate across bounded contexts. Considering the domain events described above, both the header created and header corrected events define an array of enum values called “flags”. One of the flag enum values is RESEARCHABLE. In our domain we have a research context and whenever a package’s RESEARCHABLE flag is toggled, this context wants to know about it. So I have created a ResearchabilityToggled event, but I’m not sure how I should raise this event. One option could be to raise this event from within the Package’s query side updater, which is an event handler service that listens for all of the Package aggregate domain events, in order to update the query side state. It seems like this service could be responsible for publishing integration events on the Event Bus, but I’m not sure if this is the right way to handle it.

Thanks for any input!

Troy